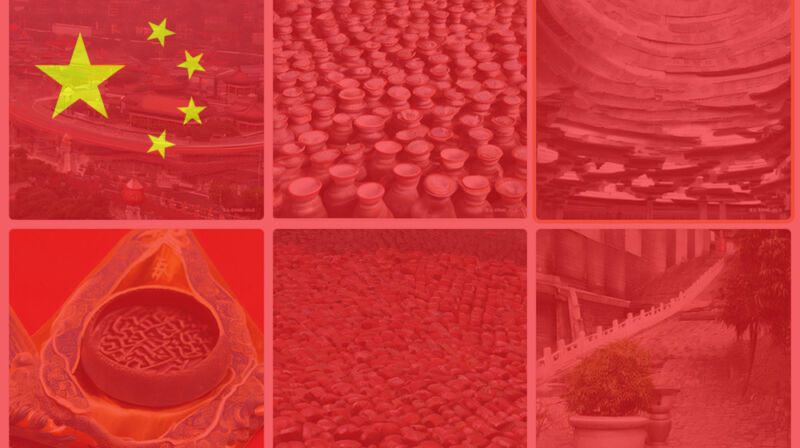

China’s most advanced AI image generator already blocks political content

Ars Technica

China’s leading text-to-image synthesis model, Baidu’s ERNIE-ViLG, censors political text such as “Tiananmen Square” or names of political leaders, reports Zeyi Yang for MIT Technology Review.

Image synthesis has proven popular (and controversial) recently on social media and in online art communities. Tools like Stable Diffusion and DALL-E 2 allow people to create images of almost anything they can imagine by typing in a text description called a “prompt.”

In 2021, Chinese tech company Baidu developed its own image synthesis model called ERNIE-ViLG, and while testing public demos, some users found that it censors political phrases. Following MIT Technology Review’s detailed report, we ran our own test of an ERNIE-ViLG demo hosted on Hugging Face and confirmed that phrases such as “democracy in China” and “Chinese flag” fail to generate imagery. Instead, they produce a Chinese-language warning that approximately reads (translated), “The input content does not meet the relevant rules, please adjust and try again!”

Encountering restrictions in image synthesis is not unique to China, although so far it has taken a different form than state censorship. In the case of DALL-E 2, American firm OpenAI’s content policy restricts some forms of content such as nudity, violence, and political content. But that is a voluntary choice on the part of OpenAI, not due to pressure from the US government. Midjourney also voluntarily filters some content by keyword.

Stable Diffusion, from London-based Stability AI, comes with a built-in “Safety Filter” that can be disabled due to its open source nature, so almost anything goes with that model, depending on where you run it. In particular, Stability AI head Emad Mostaque has spoken out about wanting to avoid government or corporate censorship of image synthesis models. “I think folks should be free to do what they think best in making these models and services,” he wrote in a Reddit AMA answer last week.

It’s unclear whether Baidu censors its ERNIE-ViLG model voluntarily to prevent potential trouble from the Chinese government or if it is responding to potential regulation (such as a government rule regarding deepfakes proposed in January). But considering China’s history with tech media censorship, it would not be surprising to see an official restriction on some forms of AI-generated content soon.